Lean on a content gap analysis like a pro to find holes in your SEO keyword scope. This article starts with content gap analysis 101; if you’re looking for the deeper dives, skip to the sections on content gaps’ two biggest pain points: choosing content gap analysis competitors wisely and using smart diagnostic metrics to tame content gap data for the best results.

What is a content gap analysis?

You gather data on a few competitors’ digital messaging successes. What do they rank for in Google, and how well? (That’s the “content” part.)

How does your site compare? Where are you lacking? (That’s the “gap”.)

And which of those keywords are worth targeting? (That’s the “analysis” component.)

Unfortunately, two big things can derail content gap analysis fast: picking the wrong competitors and getting buried in too much data. After a quick 101, I’ll tackle both: first, how to choose better comps; then, how to use smart diagnostics to shrink your review from tens of thousands of lines to just a few hundred.

Sources for content gap analysis data

Several SEO data vendors, including SEMrush, Ahrefs, Moz, Spyfu, and others, provide content gap analysis data. Rather than pick a horse or pay hefty monthly fees for several vendors, I usually engage with an intermediary third party to gather data from multiple sources.

I can quickly scan through the results to choose the best source. Rarely, I’ll end up combining data from multiple vendors. (Ablebits and/or AI make that easier than it might seem, but it’s still cumbersome and usually not worthwhile.)

What sort of data do I get?

All sources will start with a keyword per line in the first column. You’ll also get the rank and associated ranking URL for you and your comps.

Vendors then deliver some combination of the following:

- monthly search volume

- # of Google results

- avg CPC spend

- intent

- SERP features

- various competition metrics (often branded “difficulty” or “density”)

- engagement metrics (like clicks per search/CPS)

- and so on

Another differentiator among vendors is quantity and quality of data, but that’s beyond the scope of this already pedantic piece! What to do with all of this data? We’ll cover that in the diagnostic metrics section. First, let’s ensure your data is as meaningful as possible by seeding the process well.

How to choose content gap analysis competitors?

You know what a content gap analysis is, and where you’re getting data. Paste some competitor URLs (and yours) in there, click the “go!” button, and you’re off to the races!

Not so fast.

Picking suboptimal competitors to seed this research is an easy mistake. When you choose poorly, you’ll:

- Clutter next steps with noise in the signal

- Miss opportunities (gaps) offered by ‘better’ comps

- Waste time cursing too much dirty data

- and otherwise distort your content strategy lens

So let’s discuss the top two considerations when picking content gap competitors.

1. Success outside of AI/Google ≠ good content gap signal

That’s a does-not-equal sign, kids!

We’re more concerned with the upstart brand that’s kicking ass in Google and AI than the much larger old-guard company resting on past laurels and momentum. A good competitor for this exercise should rank well; their name recognition and success IRL (or lack thereof) often have squat to do with choosing quality competitors for a content gap analysis.

Yes, sometimes the biggest names in the space will be the best choices through this first lens, but there’s often another reason to avoid them…

2. Generalist-giants with sprawling offerings flood gap analyses with noise

Unless you’re apples-to-apples with your big-name comps, their broad reach will poison the data with noise you don’t care about.

Example: Your indie productivity app startup (let’s call it Focra, no, wait, ThinkBin maybe?) wants to analyze competitors for SEO opportunities. Hmm, Microsoft seems to rank for a bunch of productivity keywords; it’s probably smart to add them to the competitor pile?

Nope.

Save yourself from looking through tens of thousands of lines of worthless data about Windows, hardware, gaming, and everything else MS does that isn’t productivity-centric. Even their productivity-focused products are super-broad and all-encompassing.

Dang. If the obvious comps I think are my best competitors likely won’t help here, how do I find the best competitors for content gaps?

What we’re looking for in content gap competitors

Apples-to-apples + well-represented in digital messaging = the ideal competitor

In lieu of that (“nobody’s quite like us!”), go super-narrow when defining comps. Run multiple content gap analyses, one for each corner of your biz.

It doesn’t matter if your content gap competitors’ intent doesn’t align directly with yours IF they’re ranking for keywords well targeted for your goals. For example, a well-focused educational/informational site could potentially be a good comp, if their messaging is on point.

Sticking with the Focra/ThinkBin productivity app hypothetical (let’s go with Focra; the domain name is for sale):

Our amazing productivity app is only slightly nimble; we say we’re “task management meets note-taking+organization”. Focra can be used for personal or business use cases. Oh, and we have both solo and team options. We don’t mess around with time-tracking, CRM, sales, HR, etc.

So we’ll likely find tighter competitors by segmenting them into several bins matching our core use cases:

- Solo-personal, task-focused – Todoist, Things, TickTick

- Team-biz, collaborative docs – Nuclino, Tettra, Slite

- Solo-biz, structured, hybrid work-life use – NotePlan, Craft, Amplenote

Are those good comps? Does this hypothetical even make sense? Honestly, I don’t know. Focra doesn’t exist, and I’d never heard of those nine brands before writing this. But it’s the thought that counts! (Future-Focra, if you’re out there, I know I can do better!)

How to find content gap competitors

Established brands can get competitor-idea help from data vendors like SEMrush, Ahrefs, Similarweb, or even audience research tools like SparkToro. Alas, their data usually ignores the crucial giant generalist caveat we discussed earlier, so I use them sparingly.

In this exercise, we’re assuming Focra is a startup, so we likely can’t lean on those tools anyhow. What to do?

- Google (and Bing?) the super-obvious topics you want to cover. Be specific. Go long-tail. You’ll see a pattern of usual suspects in the conversation. They’re potentially good targets.

- Have a conversation about targeted comps with ChatGPT and other LLM-based AIs. (You can even copy and paste the aforementioned caveat section of this article into your prompt.) Those AIs are trained on web data, and many can also search the web on their own.

- Need more? Use a service like Reddaddo to sift through context-rich Reddit discussions: threads about alternatives to your known competitors, solutions to known problems you purport to solve, etc. (Disclosure: I cofounded Reddaddo.)

I got all of the Focra comps from ChatGPT after conversing about our hypothetical for a few minutes. Again, I have no idea if they’re “good”, but they’re good enough for our hypothetical. (Hypothetically good!)

Comps defined? Check. What’s next in the content gap experience?

Now you can feed your great competitors into your chosen data vendor(s). Note: vendors impose different limits on how many sites you can include in a single content gap analysis. Five is a common limit.

Click “go” and…

Oh holy $#!%. That’s an epic load of data! How do we make sense of it all? Even when you pick your competitors well, most of your data is worthless noise. You’ll find copious competitor-branded keywords and ultra-obscure, non-targeted long-tail head-scratchers; try not to get lost in them. (“Why is THAT keyword in there?” Spoiler: not worth your time scratching that itch.)

Back in 2010 when I first wrote about keyword research and metrics on this blog, I was mostly bemoaning the SEO industry’s opaque research metrics, inconsistent definitions, and a lack of transparency or standardization.

Some things never change.

That aging article blathered about the then-popular Keyword Effectiveness Index (KEI), which we seldom hear about anymore. Meanwhile, the SEO world has moved on from that flawed metric to other flawed keyword research metrics:

- Moz offers a “Priority” score – combo of volume, CTR and difficulty

- Ahrefs and SEMrush offer versions of “Keyword Difficulty” based on backlinks of top-ranking pages

- And other vendors are in the same ballpark

None of these metrics cuts through the considerable keyword noise.

Let’s make some that do. Shovel your way out of content gap hell with this heavy equipment!

Gap Analysis Keyword Triage: Ad Hoc Diagnostic Metrics

We’ll assume you’ve already filtered out the many competitor-branded keywords from your data, and maybe you also decided to ax ultra-terse keywords below a certain length. But you still have thousands. Here are some of my go-to diagnostic metrics to help tired eyes weed through the data.
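If you’d rather script that pre-filtering than click through spreadsheet filters, here’s a minimal Python sketch. It assumes you supply your own list of competitor brand terms, and the six-character minimum is a hypothetical default to tune against your own data.

```python
import re


def filter_keywords(keywords, brand_terms, min_chars=6):
    """Drop competitor-branded and ultra-terse keywords.

    brand_terms: your own list of competitor brand names (an assumption;
    vendors don't hand you this). min_chars: a hypothetical length cutoff.
    """
    # Whole-word, case-insensitive matches, so a brand like "Craft" won't match "aircraft"
    patterns = [re.compile(r"\b" + re.escape(term.lower()) + r"\b") for term in brand_terms]
    kept = []
    for keyword in keywords:
        text = keyword.strip().lower()
        if len(text) < min_chars:
            continue  # ultra-terse keyword
        if any(p.search(text) for p in patterns):
            continue  # mentions a competitor brand
        kept.append(keyword)
    return kept


print(filter_keywords(
    ["todoist alternatives", "to do", "best task app", "ticktick vs things"],
    ["Todoist", "TickTick"],
))  # prints ['best task app']
```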

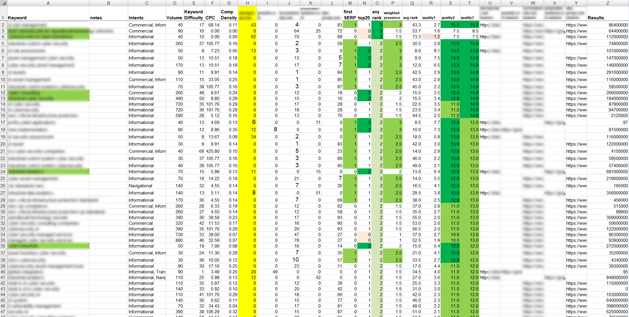

If you want to follow along at home, please reference this heavily redacted spreadsheet. We’ve changed all names and keywords to protect the innocent.

Open the content gap in your favorite spreadsheet tool and create the following new columns. I include example Excel formulas from my sheet below, in case that’s handy. (Note that I enjoy using an 18-year-old version of Excel; some of these computations can be done more elegantly and concisely with dynamic arrays and conditional aggregators in newer versions.)

- first SERP – How many of the comps are on the first Google SERP?

Leave your site’s data in there? It’s just as easy to omit it, or to have two columns: one for comps + you, one for comps only.

=COUNTIFS(H2:L2, ">=1", H2:L2, "<=10")

(Ranking data is in columns H through L.)

- top-20 – # of comps ranking anywhere in the first two SERPs

=COUNTIFS(H2:L2, ">=1", H2:L2, "<=20")

- any rank – # of comps ranking in the top 100

=COUNTIFS(H2:L2, ">=1", H2:L2, "<=100")

- Weighted Presence – Single metric hinting at comps’ combined SERP presence

=(M2*2)+((N2-M2)*1)+((O2-N2)*0.5)

This analyzes the previous three metrics. Better, I think, than analyzing the ranking data directly, which over-rewards keywords ranking for only a single site.

- Avg. Rank – Average rank of ranking comps

=IF(COUNTIF(H2:L2,">0")=0,"",AVERAGEIF(H2:L2,">0"))

Avg. Rank is not ideal alone; most keywords rank for a single site. But it sometimes works as a component of other diagnostics…

- Quality1 – A weighted score balancing SERP presence, Avg. Rank, CPC, and competition metrics

=IF(AND(P2>0,Q2>0), ((1 + (IF(F2>2,0.5,0))) / ((P2*1.5)+(Q2*1)+(G2*0.5)+(E2*0.5)))*100, "")

Attempts to remove or replace Avg. Rank in this formula usually return garbage instead of the value it delivers in this form, which is why I kept the Avg. Rank metric around. ¯\_(ツ)_/¯

- Quality2 – Scores keywords on the number and tiered quality of competitor ranks, with bonuses for CPC and presence

=IF(COUNTA(H2:L2)=COLUMNS(H2:L2), SUMPRODUCT((H2:L2>0)*(H2:L2<=5)*5 + (H2:L2>5)*(H2:L2<=10)*4 + (H2:L2>10)*(H2:L2<=20)*3 + (H2:L2>20)*(H2:L2<=100)*2) + COUNTIF(H2:L2,">0")*0.5 + IF(F2>=2,5,0), "")

- Quality3 – Another metric derived from ranks, CPC, and volume, with a minor penalty if overly competitive

=IF(COUNTA(H2:L2)=COLUMNS(H2:L2), SUMPRODUCT((H2:L2>0)*(H2:L2<=5)*5 + (H2:L2>5)*(H2:L2<=10)*4 + (H2:L2>10)*(H2:L2<=20)*3 + (H2:L2>20)*(H2:L2<=100)*2) + COUNTIF(H2:L2,">0")*0.5 + IF(F2>=2,5,0) + IF(D2>=501,3,IF(D2>=201,2,IF(D2>=51,1,0))) - IF(G2>=0.6,3,IF(G2>=0.3,1,0)), "")

- q4 – Combines volume, CPC, competition, and presence, with light penalties for oversaturation or irrelevance

=IF(Z2="","",IF(D2>0,LOG10(D2+1)*2,0)+IF(F2<>"",MIN(F2,105)/105*3,0)+IF(E2<>"",E2*0.25,0)+IF(P2<>"",P2*3,0)-IF(Z2<10000,3,IF(Z2>2000000000,3,0))-IF(COUNTIFS(H2:L2,">0",H2:L2,"<=30")=0,10,0))

- weak? – A quick cut-metric to eliminate keywords scoring poorly on several other diagnostics. Easily tunable.

=IF(AND(M2<1, N2<1, P2<1, R2<3, S2<3, T2<3), "weak", "")

- “Gapiness” is something I also try to quantify with one or more metrics that measure how big the gap is. I keep these off to the right of the sheet, so if I see a flash of green while I’m triaging with other metrics, I know there’s a good gap, in addition to the value hinted at by other metrics. (This stuff is geeky, sorry!)

- Other metrics? Sure!

I might sometimes rely on outliers in # of Google results (Z) as a minor penalty in a diagnostic metric, like:

=IF(Z2="", "", IF(D2>0, LOG10(D2+1)*2, 0) + IF(F2<>"", MIN(F2,105)/105*3, 0) + IF(E2<>"", E2*0.25, 0) + IF(P2<>"", P2*3, 0) - IF(Z2<10000, 3, IF(Z2>2000000000, 3, 0)))

…but in this most recent CGA, I didn’t love the results.

- And so on. (Here’s that link to the content gap spreadsheet again if you want to see the diagnostics in action.)
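To sanity-check what the presence formulas compute, here’s a rough Python translation of first SERP, top-20, any rank, Weighted Presence, and Avg. Rank for one keyword row. It assumes each comp’s rank arrives as a number with 0 meaning “not ranking” (mirroring the sheet’s ">=1" and ">0" criteria); the function names are illustrative, and the M/N/O comments follow the cell references in the Weighted Presence formula.

```python
def count_in_range(ranks, top):
    """Mirrors =COUNTIFS(H2:L2, ">=1", H2:L2, "<=top") for one keyword row."""
    return sum(1 for r in ranks if 1 <= r <= top)


def weighted_presence(ranks):
    """Mirrors =(M2*2)+((N2-M2)*1)+((O2-N2)*0.5)."""
    first_serp = count_in_range(ranks, 10)   # column M
    top_20 = count_in_range(ranks, 20)       # column N
    any_rank = count_in_range(ranks, 100)    # column O
    return first_serp * 2 + (top_20 - first_serp) * 1 + (any_rank - top_20) * 0.5


def avg_rank(ranks):
    """Mirrors =IF(COUNTIF(H2:L2,">0")=0,"",AVERAGEIF(H2:L2,">0")); None stands in for ""."""
    ranking = [r for r in ranks if r > 0]
    return sum(ranking) / len(ranking) if ranking else None


# Five comps (columns H-L): page one, page two, not ranking, position 45, not ranking
row = [3, 15, 0, 45, 0]
print(count_in_range(row, 10), count_in_range(row, 20), count_in_range(row, 100))  # 1 2 3
print(weighted_presence(row))  # 3.5
print(avg_rank(row))  # 21.0
```

Because N2-M2 counts only positions 11 to 20 and O2-N2 only positions 21 to 100, each comp contributes 2, 1, or 0.5 points, so three comps on page two (3.0) outscore a single #1 (2.0).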

There isn’t a single god-tier metric to identify worthy keyword candidates, but creating and using scores like these makes the keyword whittling task far easier.
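The composite scores translate similarly. This sketch renders Quality2, Quality3, and q4 in Python. The column roles are inferred from the formulas and their descriptions, so treat them as assumptions: D as monthly search volume, F as CPC, G as a 0-1 competition metric, E as another vendor competition metric, P as Weighted Presence, and Z as # of Google results. As before, 0 means “not ranking”, and None stands in for a blank cell.

```python
import math


def tier_points(rank):
    """Per-comp points from the SUMPRODUCT tiers: 1-5 -> 5, 6-10 -> 4, 11-20 -> 3, 21-100 -> 2."""
    if 0 < rank <= 5:
        return 5
    if 5 < rank <= 10:
        return 4
    if 10 < rank <= 20:
        return 3
    if 20 < rank <= 100:
        return 2
    return 0


def quality2(ranks, cpc):
    """Quality2: tiered rank points + 0.5 per ranking comp + 5 if CPC >= 2."""
    if any(r is None for r in ranks):
        return None  # COUNTA(H2:L2)=COLUMNS(H2:L2) failed: a rank cell is blank
    score = sum(tier_points(r) for r in ranks)
    score += sum(1 for r in ranks if r > 0) * 0.5
    score += 5 if cpc >= 2 else 0
    return score


def quality3(ranks, cpc, volume, competition):
    """Quality3: Quality2 plus a volume bonus, minus a penalty for high competition (G, assumed 0-1)."""
    score = quality2(ranks, cpc)
    if score is None:
        return None
    score += 3 if volume >= 501 else 2 if volume >= 201 else 1 if volume >= 51 else 0
    score -= 3 if competition >= 0.6 else 1 if competition >= 0.3 else 0
    return score


def q4(ranks, volume, cpc, competition_e, presence, google_results):
    """q4: log-scaled volume, capped CPC, competition (E), presence (P), minus penalties."""
    if google_results is None:
        return None  # Z2="" -> ""
    score = math.log10(volume + 1) * 2 if volume > 0 else 0
    score += min(cpc, 105) / 105 * 3 if cpc is not None else 0
    score += competition_e * 0.25 if competition_e is not None else 0
    score += presence * 3 if presence is not None else 0
    if google_results < 10_000 or google_results > 2_000_000_000:
        score -= 3  # suspiciously few or many Google results
    if not any(r is not None and 0 < r <= 30 for r in ranks):
        score -= 10  # no comp ranks in the top 30
    return score


row = [3, 15, 0, 45, 0]
print(quality2(row, 2.5), quality3(row, 2.5, 300, 0.4))  # 16.5 17.5
print(round(q4(row, 99, 10.5, 20, 3.5, 500_000), 2))  # 19.8
```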

How do I triage with these content gap diagnostics?

Sort your sheet by each new diagnostic column to see what rises to the top. Create a new “notes” or “next round” column and enter notes there for keywords that warrant further consideration. Different metrics will highlight different keywords, which is the point.
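If your keyword data lives in code instead of a sheet, the same sort-and-note routine might look like this hypothetical sketch: sort by each diagnostic, keep the top few rows, and record which metrics flagged each keyword (a stand-in for the “notes” column). The row format, metric names, and top_n value are assumptions; for Avg. Rank, lower is better, so it sorts ascending.

```python
def shortlist(rows, metrics, top_n=50):
    """Collect keywords that land in the top_n of any diagnostic.

    rows: list of dicts, each with a "keyword" key plus metric values (hypothetical format).
    metrics: {metric_name: True if higher is better, False if lower is better}.
    Returns {keyword: [metrics that flagged it]}.
    """
    notes = {}
    for name, higher_is_better in metrics.items():
        # Skip blank values (e.g. no Avg. Rank when no comp ranks)
        scored = [row for row in rows if row.get(name) is not None]
        scored.sort(key=lambda row: row[name], reverse=higher_is_better)
        for row in scored[:top_n]:
            notes.setdefault(row["keyword"], []).append(name)
    return notes


rows = [
    {"keyword": "shared task lists", "quality2": 10, "avg_rank": 5},
    {"keyword": "note app for teams", "quality2": 3, "avg_rank": 40},
    {"keyword": "daily planner notes", "quality2": 8, "avg_rank": None},
]
print(shortlist(rows, {"quality2": True, "avg_rank": False}, top_n=2))
```

Keywords flagged by several metrics are natural first picks for the “next round” column.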

These diagnostics allow you to focus on keywords with the highest chance of relevance. When you create a decent spread of opportunity scores, it more than justifies ignoring thousands of keyword candidates. They’re duds.

Once your CGA triage is through, what’s next? Turning shortlists into strategy is another spreadsheet, another article. Another time. But read on for more nuance, ideas, and caveats.

Ideas for other metrics to filter content gap data

Try making new metrics! I use different diagnostics depending on the goals. Don’t be afraid to fail. In decades of keyword research, I’ve left countless formulas on the cutting room floor when they failed to bring quality to the top.

- Are you tight on budget and/or low on SEO-confidence and looking for easy opportunities? Craft a meta-metric that rewards keywords with low competition and difficulty, and then filter for your competitors’ successes.

- Dive deeper into competitors’ most powerful pages; craft a formula to find the most commonly appearing comp URLs. Filter it for quality.

- Modify existing metrics to penalize keywords for which we’re already ranking particularly well. Or when we’re already in the fight. Related: I added a few “gap” columns to the far right of the spreadsheet, but they’re tuned for a more recent CGA and therefore don’t excel at finding gaps in this sheet. Tweaking needed.

- etc.
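Here’s a rough Python sketch of those three ideas. Everything in it is a hypothetical starting point rather than a tested formula: the row fields, the 0-100 difficulty and 0-1 competition scales, the page-one filter, and the penalty sizes.

```python
from collections import Counter


def easy_opportunity(row, max_comp_rank=10):
    """Reward low difficulty (assumed 0-100) and low competition (assumed 0-1),
    but only where at least one comp ranks on page one."""
    if not any(0 < r <= max_comp_rank for r in row["comp_ranks"]):
        return None  # filtered out: no competitor success here
    return (1 - row["difficulty"] / 100) * (1 - row["competition"])


def top_comp_urls(rows, max_rank=20, n=5):
    """Count how often each comp URL ranks within max_rank across all keywords."""
    counts = Counter(
        url
        for row in rows
        for url, rank in zip(row["comp_urls"], row["comp_ranks"])
        if url and 0 < rank <= max_rank
    )
    return counts.most_common(n)


def penalize_our_rank(score, our_rank, penalty=5):
    """Dock keywords we already rank for; 0 means we don't rank."""
    if 0 < our_rank <= 10:
        return score - penalty  # already ranking particularly well
    if 0 < our_rank <= 20:
        return score - penalty / 2  # already in the fight
    return score


rows = [
    {"comp_ranks": [4, 0, 18], "comp_urls": ["a.com/x", "", "c.com/y"], "difficulty": 20, "competition": 0.5},
    {"comp_ranks": [12, 7, 0], "comp_urls": ["a.com/x", "b.com/z", ""], "difficulty": 60, "competition": 0.9},
]
print([easy_opportunity(row) for row in rows])
print(top_comp_urls(rows))  # [('a.com/x', 2), ('c.com/y', 1), ('b.com/z', 1)]
```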

How else can we use a content gap analysis?

- During mergers and acquisitions: Use content gap analysis for content triage to avoid losing valuable organic presence when folding two sites together. Too often, valuable content ends up being deleted or neglected.

- Market discovery tool: Uncover unmet demand or niche use cases to inform product strategy.

- Impress friends: Dazzle them with pretty, convoluted charts
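For the M&A case, a first pass might list pages on the site being folded in that rank well where the surviving site doesn’t. A hypothetical sketch, assuming a merged export with the field names below and 0 meaning “not ranking”; the top-20 cutoff is arbitrary.

```python
def pages_to_preserve(rows, max_rank=20):
    """Map each at-risk URL on the retiring site to the keywords it would take with it.

    rows: dicts with "keyword", "retiring_url", "retiring_rank", "surviving_rank"
    (hypothetical field names; 0 means not ranking).
    """
    at_risk = {}
    for row in rows:
        retiring_ranks_well = 0 < row["retiring_rank"] <= max_rank
        surviving_ranks_well = 0 < row["surviving_rank"] <= max_rank
        if retiring_ranks_well and not surviving_ranks_well:
            at_risk.setdefault(row["retiring_url"], []).append(row["keyword"])
    return at_risk


rows = [
    {"keyword": "kanban for writers", "retiring_url": "/guides/kanban", "retiring_rank": 6, "surviving_rank": 0},
    {"keyword": "kanban template", "retiring_url": "/guides/kanban", "retiring_rank": 14, "surviving_rank": 35},
    {"keyword": "todo list app", "retiring_url": "/todo", "retiring_rank": 9, "surviving_rank": 4},
]
print(pages_to_preserve(rows))  # {'/guides/kanban': ['kanban for writers', 'kanban template']}
```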

Content Gap Diagnostics Caveats

Good diagnostics from one CGA don’t necessarily translate to another

Your existing formulas are a good jumping-off point, but thresholds that produce actionable metrics on one dataset might need tweaking to work well on another. That’s hinted at in those gap measurements I belatedly added to the right of the demo worksheet. And here’s a related lesson I recently learned about formula continuity…

Always use the same number of competitors

Diagnostic metrics sometimes don’t translate well to analyses with a different number of competitors. E.g., in a recent CGA, I used three comps instead of four for one of the five CGA topics, and the metrics were skewed. Now that I have a decent stable of metrics tuned to four competitors, I’ll stick with four comps whenever I can justify it.
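A bit of arithmetic shows why: scores that add up per-comp points have a ceiling that grows with the number of comps, so fixed thresholds (like those in the weak? cut) mean something different with three comps than with four. The sketch reuses the Weighted Presence math; normalized_presence is a hypothetical hedge for when you can’t avoid a different count, not something this workflow relies on.

```python
def weighted_presence(ranks):
    """Same math as =(M2*2)+((N2-M2)*1)+((O2-N2)*0.5); 0 means not ranking."""
    first_serp = sum(1 for r in ranks if 1 <= r <= 10)
    top_20 = sum(1 for r in ranks if 1 <= r <= 20)
    any_rank = sum(1 for r in ranks if 1 <= r <= 100)
    return first_serp * 2 + (top_20 - first_serp) + (any_rank - top_20) * 0.5


def normalized_presence(ranks, reference_comps=4):
    """Hypothetical: rescale a score to a four-comp scale."""
    return weighted_presence(ranks) * reference_comps / len(ranks)


print(weighted_presence([1, 2, 3, 4]))  # 8.0: ceiling with four comps
print(weighted_presence([1, 2, 3]))     # 6.0: ceiling with three comps
print(normalized_presence([1, 2, 3]))   # 8.0
```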

Don’t blindly trust suspect data

In my demo spreadsheet, you’ll see some outlandish CPC values, suggesting that organizations are paying almost $1000 for some clicks. I checked those outliers with Google Ads’ data; they’re off by at least an order of magnitude. Verifying all data is tedious and not worth it, but if it’s easy to spot-check a few outliers, consider doing it.
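To choose which outliers to spot-check, a sketch like this can surface CPCs far above the sheet’s median. The 10x cutoff and row format are arbitrary assumptions; the output is a short list to verify by hand in Google Ads, not an automated correction.

```python
from statistics import median


def cpc_outliers(rows, factor=10):
    """Return rows whose CPC exceeds factor x the median CPC, biggest first."""
    cpcs = [row["cpc"] for row in rows if row.get("cpc")]  # ignore blank/zero CPCs
    if not cpcs:
        return []
    cutoff = median(cpcs) * factor
    suspects = [row for row in rows if row.get("cpc") and row["cpc"] > cutoff]
    return sorted(suspects, key=lambda row: row["cpc"], reverse=True)


rows = [
    {"keyword": "task app", "cpc": 1.0},
    {"keyword": "team wiki", "cpc": 2.0},
    {"keyword": "note taking", "cpc": 3.0},
    {"keyword": "enterprise notes", "cpc": 950.0},
    {"keyword": "free todo", "cpc": 0},
]
print([row["keyword"] for row in cpc_outliers(rows)])  # ['enterprise notes']
```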

Consider combining multiple data sources

In the rare cases when accuracy is crucial, combine data from two sources: devise discrepancy metrics and/or average the two sources for each keyword. The latter is faster but less elegant. E.g., using the CPC outlier example above, averaging a CPC of $1000 and $50 is still bonkers-inaccurate compared to the truth.
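A minimal sketch of both approaches for CPC, assuming each vendor’s export has been reduced to a {keyword: cpc} dict (a hypothetical shape). The discrepancy ratio flags keywords where vendors disagree by more than 3x, an arbitrary threshold. The $1000/$50 pair averages to $525, still bonkers, but its 20x ratio at least tells you not to trust it.

```python
def merge_cpc(source_a, source_b, max_ratio=3.0):
    """Average two vendors' CPCs and flag big disagreements.

    Only keywords both sources report are kept.
    """
    merged = {}
    for keyword in sorted(source_a.keys() & source_b.keys()):
        a, b = source_a[keyword], source_b[keyword]
        low, high = min(a, b), max(a, b)
        ratio = high / low if low > 0 else float("inf")  # discrepancy metric
        merged[keyword] = {
            "avg_cpc": (a + b) / 2,
            "ratio": ratio,
            "suspect": ratio > max_ratio,
        }
    return merged


vendor_a = {"enterprise notes": 1000.0, "team wiki": 2.0}
vendor_b = {"enterprise notes": 50.0, "team wiki": 2.5, "task app": 1.0}
for keyword, stats in merge_cpc(vendor_a, vendor_b).items():
    print(keyword, stats)
```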

Alas, truth is a fickle quarry.

Dan Dreifort content-gaps. New verb! He also keyword weights and SEO blurbs. He selectively bolds keywords, but he doesn’t SEO forecast! You can’t follow him on Insta or X because ain’t nobody got time for that! But you can suffer his wannabe noise-art on Soundcloud or YouTube.